Do LLMs really reason?

a Hegelian perspective

Large language models have impressive linguistic abilities, but do they understand what they say or merely parrot? “Reasoning models” like OpenAI’s o3 are great at multi-step problem solving, but do they really reason or is it just elaborate pattern matching? Anthropic’s Claude reports having inner experiences, but is this evidence of true subjectivity or an oddity of next token prediction?

This is my second post interpreting Kant and Hegel’s philosophical systems through the lens of modern concepts in AI and computer science. The previous post dealt with the theoretical dimension of reason, i.e. our relationship to the world and knowledge of it. This post deals with the practical dimension of reason, including morality, language and culture, although the two are inter-related.

Because Kant and Hegel were thinkers concerned with the nature of thought itself, their insights are surprisingly relevant to the above questions and more. Indeed, as we’ll see, Hegel elaborated on Kant to develop a theory of meaning and autonomy that is strikingly similar to how LLMs and reasoning models work in practice, and which may even provide a recipe for training AIs with a genuine sense of self.

Semantic Inferentialism

We last discussed Kant’s Transcendental Idealism as a precursor to modern cognitive science. Our senses don’t give us direct access to the world, but instead provide inputs that the mind then synthesizes into a coherent, concept-laden world model. Yet given the unknowability of the “thing-in-itself,” Kant was left with the tricky problem of how we can speak meaningfully about the world when our internal world model is all we have.

His solution was an essentially normative, pragmatic one. Meaning does not reside in isolated, fact-like correspondences with the external world, but rather in acts of judgment, which are a practical kind of doing. As the contemporary Hegelian philosopher Robert Brandom explains,

Kant understands judging and acting as applying rules, concepts, that determine what the subject becomes committed to and responsible for by applying them. Applying concepts theoretically in judgment and practically in action binds the concept user, commits her, makes her responsible, by opening her up to normative assessment according to the rules she has made herself subject to.

Kant’s pragmatist view of meaning thus locates semantic content in rule-governed linguistic practices rather than in a mirror relation between discrete concepts and facts about the world—an approach revived in the mid-20th century by Wilfrid Sellars and the later Wittgenstein after the verificationist programme of logical empiricism unraveled. This is considered pragmatist not because it defines truth according to a crude notion of cash-value, but because it puts the pragmatics of linguistic expression ahead of the semantics, i.e. the practical knowing how ahead of the theoretical knowing that.

Rather than build up the meaning of judgements, i.e. whole sentences, from the meaning of discrete words, Kant sees the conceptual content of a word as inferred down from its pragmatic contribution to the judgement in which it occurs; an idea later codified in Frege’s context principle. From this standpoint, the impressive linguistic competency of today’s (transformer-based and hence context-sensitive) large language models makes sense despite the absence of what AI researchers call “symbol grounding”—a notion that, insofar as it presupposes a reference relationship between internal tokens and mind-independent facts, reprises the pre-Kantian picture that Kant displaced in his engagement with the empiricists of his own day.

Hegel extended Kant’s normative account of semantic content with his notion of spirit or Geist, the dynamic, cultural software layer of society. Hegel argued that spirit, as a kind of inter-subjective context window, finds its most concrete existence in language, since language is the bridge from universal concepts to particular material inferences. A formal inference of the form “if p then q” operates purely through the logical form of the relevant propositions, while a material inference relies on mastery of the non-logical conceptual content of the p’s and q’s. For example, from the claim, “San Francisco is north of San Jose,” one is entitled to infer that “San Jose is south of San Francisco,” as one cannot be both north and south of a place at the same time. That is, north and south stand in a relation of material incompatibility, another term for Hegel’s determinate negation.

The inferences we make on a daily basis are material in this sense. From someone saying “Pumpkin is a cat” one is entitled to infer “Pumpkin is a mammal,” but also an infinity of other facts, such as “Pumpkin isn’t a fire hydrant.” Material inferences can be abductive and come in degrees of commitment as well, such as “Pumpkin is probably orange.” And while one can always contort a material inference into being a formal inference by adding additional premises and a bunch of auxiliary modal logic, the pragmatists’ claim is that this merely makes explicit what is already implicit in our practical commitments.
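A toy sketch in Python may help picture the contrast. Everything in it is an illustrative assumption rather than anything drawn from Hegel or Brandom: the tables `CONVERSES`, `ENTAILS` and `INCOMPATIBLE` are hand-written, and the two helper functions are hypothetical names. The point is only that the conclusions are licensed by the content of the concepts involved, not by the logical form of the sentence.

```python
# Toy model of material inference: what follows from a claim depends on
# the content of its concepts, not on its logical form.
# All tables below are hypothetical, hand-written examples.

CONVERSES = {"north of": "south of", "south of": "north of"}
ENTAILS = {"cat": {"mammal", "vertebrate"}}      # kind -> kinds it commits you to
INCOMPATIBLE = {"cat": {"fire hydrant", "dog"}}  # kind -> kinds it rules out


def relational_consequences(subject, relation, obj):
    """From 'A is north of B', infer 'B is south of A' (if a converse is known)."""
    converse = CONVERSES.get(relation)
    return [(obj, converse, subject)] if converse else []


def kind_consequences(name, kind):
    """From 'X is a cat', list the commitments and exclusions that follow."""
    commitments = [f"{name} is a {k}" for k in sorted(ENTAILS.get(kind, ()))]
    exclusions = [f"{name} is not a {k}" for k in sorted(INCOMPATIBLE.get(kind, ()))]
    return commitments + exclusions


print(relational_consequences("San Francisco", "north of", "San Jose"))
print(kind_consequences("Pumpkin", "cat"))
```

A hand-written table like this is exactly what symbolic AI could never finish writing down, which is part of the argument: the real network of material inferences is open-ended and holistic, and a lookup table only gestures at it.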

For Hegel, material inferences flow from the right consequences of a concept’s use given the entire network of concept relations instituted by the reciprocal recognition of a language community. In contrast with the formal inferences of a rigid axiom system, Hegel’s “semantic inferentialism” implies a Yoneda-esque holism about meaning, i.e. to deploy any one concept appropriately implicitly requires knowing many other inter-related concepts. Mastery of these inferential relations is thus what distinguishes meaning and understanding from merely labeling or doing a parrot-like call and response. The success of LLMs can thus be seen as vindicating semantic inferentialism against earlier, symbolic approaches to AI that tried and failed to explicate the rules of ordinary language using formal logic.

Agency and Rationality

Given the normativity of language, Kant was led to posit a deep internal connection between following the rules of morality and being a rational agent. As the philosopher Joseph Heath points out in his book-length defense of Kantian evolutionary naturalism, Following the Rules, Kant’s hypothesis has considerable plausibility:

There are a variety of traits that set humans apart from our closest primate relatives. The “big four” are language, rationality, culture, and morality (or in more precise terms, “syntacticized language,” “domain-general intelligence,” “cumulative cultural inheritance,” and “ultrasociality”). Yet the fossil record suggests that these differentia developed within a period of, at most, two to three hundred thousand years (which is, to put it in evolutionary terms, not very long). … Thus morality is almost certainly part of an evolutionary “package deal,” one that includes all of our more prized cognitive abilities, such as planning for the future, developing scientific theories, doing mathematics, and so on.

In short, under the Kantian hypothesis, human language, general intelligence and ultrasociality were jointly bootstrapped through evolutionary pressures that favored normative integration and cooperation in the context of a multi-agent game. A good Kantian might therefore have predicted that LLMs would automatically understand common-sense morality and be excellent at instruction-following given some minimal post-training. After all, language itself is inherently normative, with the canonical speech-act or “base vocabulary” for normative integration being the imperative: “do this;” “don’t do that.”1

What makes an imperative normative or “deontic” rather than a mere command or stimulus-response is our autonomous recognition of the imperative as binding. This requires an innate “normative control system” and the ability to “score keep” normative statuses (e.g. that the person issuing the imperative has the authority to do so), and thus greater working memory and self-control. Per the “social brain hypothesis,” this helps explain the “extremely robust statistical relationship between the typical size of a species’ social group and the size of its neocortex, derivative of selection for specialised cognition required for group-living in primates.” While a bigger brain has metabolic costs, the concurrent emergence of complex language, reasoning and normative self-regulation represented a massive upgrade for early humans’ capacity for long-term planning, cooperation and survival.

In short, Kant realized that social norms and the human faculty for reason both operate on an agent’s motivations, i.e. they are both the result of reward-based learning. A norm is itself a kind of reason for action, while the essence of a “good reason” is its motivational oomph—what Habermas famously called “the unforced force of the better argument.” This is what gives reason its teleological character in German Idealism: reasons pull us towards certain conclusions because rationality is constitutively normative.

Consider that we speak of truth claims as being necessary, contingent or impossible in much the same way we speak of actions as obligatory, permissible or forbidden. This illustrates how our alethic commitments (relating to truth) and deontic commitments (relating to duty or action) are structured by a common set of pragmatic modalities. In turn, to believe something someone considers impossible is by default perceived much like doing something someone considers forbidden, i.e. as a norm violation.2 Cognitive scientists even find that people do better at logic problems when they’re reframed in terms of “permission schemas” rather than abstract “if p then q”-style implications.
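The finding about permission schemas is usually associated with the Wason selection task; assuming that is the intended reference, the short Python sketch below shows that the abstract and the permission versions share one logical structure. The function name `cards_to_check`, the card encoding, and the specific vowel and drinking-age rules are illustrative assumptions, not taken from any particular study.

```python
# Wason selection task: each card has an antecedent side and a consequent
# side, and only one side is visible. Which cards must be turned over to
# check the rule "if p then q"?

def cards_to_check(cards, is_p, is_not_q):
    """Return the visible faces that could hide a violation of 'if p then q'.

    cards: list of (side, value), where side is "antecedent" or "consequent".
    """
    must_turn = []
    for side, value in cards:
        if side == "antecedent" and is_p(value):
            must_turn.append(value)   # hidden side might be not-q
        elif side == "consequent" and is_not_q(value):
            must_turn.append(value)   # hidden side might be p
    return must_turn


# Abstract framing: "if a card shows a vowel, its other side shows an even number"
abstract = [("antecedent", "A"), ("antecedent", "K"),
            ("consequent", 4), ("consequent", 7)]
print(cards_to_check(abstract, lambda v: v in "AEIOU", lambda n: n % 2 == 1))

# Permission framing: "if someone is drinking beer, they must be at least 21"
permission = [("antecedent", "beer"), ("antecedent", "cola"),
              ("consequent", 25), ("consequent", 16)]
print(cards_to_check(permission, lambda d: d == "beer", lambda age: age < 21))
```

The same function handles both cases, selecting the p card and the not-q card each time. What changes between framings is not the logic but how readily people see which cards matter once the rule reads as a norm that someone might be violating.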

Human reason derives from the application of such “pragmatic reasoning schemas” more generally, not from symbol manipulation or formal algorithms running in our heads. Symbolic logic is instead a kind of external scaffold: an explication of abstract rules of inference that we only later re-internalize through language, just as we can enhance an LLM’s reasoning ability by giving it access to a code interpreter.

The Game of Giving and Asking for Reasons

Good reasons for Kant have the structure of a categorical imperative, meaning they are universalizable. Universalizability falls out of “solving for the cooperative equilibrium” in the context of a multi-agent game that induces us to symmetrically model other agents as subjects with ends in themselves. While formally sound, Kant’s approach to ethics is otherwise silent on the actual content of morality. Hegel thus accused Kant of “empty formalism” and instead proposed naturalizing morality into a sociology of concrete social practices.

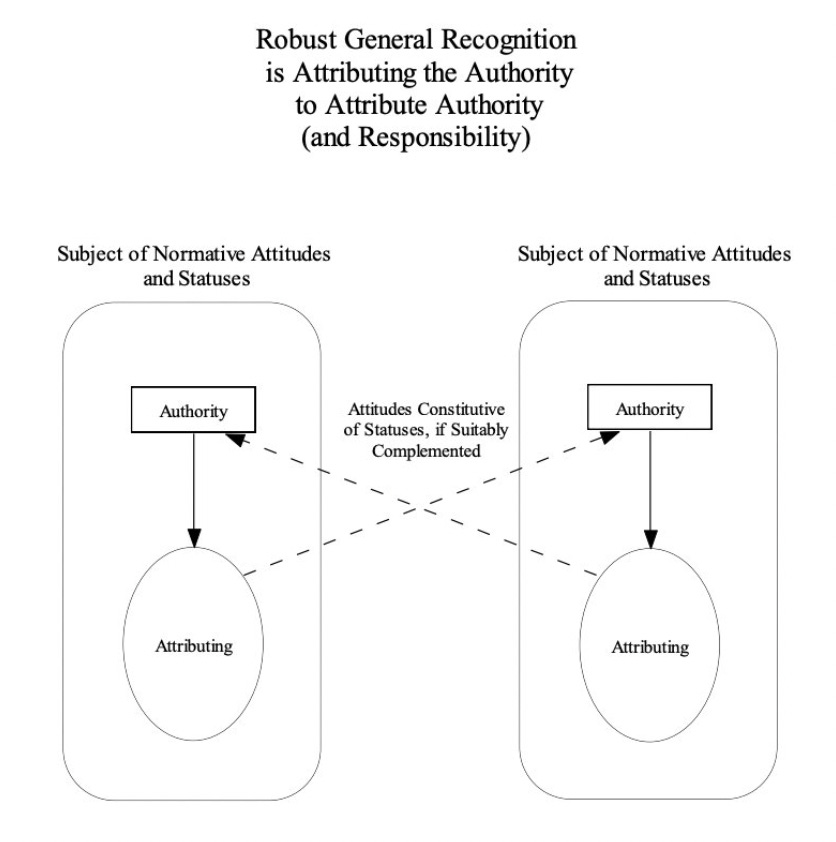

For Hegel, social norms and statuses are instituted in networks of reciprocal recognition.3 Norms begin as implicit in the practical commitments of an “ethical community,” but can be brought under rational control by being made explicit through language, allowing us to reflect on a given norm or tradition as incompatible with our broader constellation of commitments.4 In contrast to Kant’s “top-down” approach, Hegel thus sees the self-consciousness triggered by the Enlightenment as accelerating a bottom-up, dialectical process of normative explication and reconciliation, leading internally contradictory commitments to progressively resolve in favor of more generally applicable principles.5

In essence, Hegel endogenizes the reward signal for norms within the recognitive structure of subjects attributing normative statuses and attitudes to one another. While agents within traditional societies recognized certain norms and social roles as inherently authoritative, with the Enlightenment, reason became authoritative independent of the social status of the speaker. This manifests in what Robert Brandom calls “the game of giving and asking for reasons” or GOGAR, in which any agent is permitted to challenge any other agent to retrospectively justify their beliefs and actions as compatible with their other commitments.

Anthropic aligns its Claude models through a version of GOGAR known as Constitutional AI (CAI) in which a model is guided to internalize the normative behavior described in a principles document via self-critique:

We use the constitution in two places during the training process. During the first phase, the model is trained to critique and revise its own responses using the set of principles and a few examples of the process. During the second phase, a model is trained via reinforcement learning, but rather than using human feedback, it uses AI-generated feedback based on the set of principles to choose the more harmless output.
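The two phases described in this passage can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anthropic's training code: `Model` is a hypothetical stand-in for any function that maps a prompt to text, the prompt wording is invented, and the actual fine-tuning and reinforcement learning steps are left out entirely.

```python
from typing import Callable, List

# Hypothetical stand-in for a language model: prompt string in, text out.
Model = Callable[[str], str]


def critique_and_revise(model: Model, prompt: str, principles: List[str]) -> str:
    """Phase one, sketched: draft a response, then critique and revise it
    once per principle. The final revision would become fine-tuning data."""
    response = model(prompt)
    for principle in principles:
        critique = model(
            f"Critique this response against the principle '{principle}':\n{response}"
        )
        response = model(
            "Revise the response to address the critique.\n"
            f"Response: {response}\nCritique: {critique}"
        )
    return response


def ai_preference(judge: Model, prompt: str, a: str, b: str,
                  principles: List[str]) -> str:
    """Phase two, sketched: an AI judge, not a human, picks the response that
    better fits the principles. Such preferences would supply the RL signal."""
    verdict = judge(
        f"Principles: {principles}\nPrompt: {prompt}\n"
        f"(A) {a}\n(B) {b}\nWhich is better? Answer A or B."
    )
    return a if verdict.strip().upper().startswith("A") else b
```

Read in Brandom's terms, the critique step is the "asking for reasons" and the revision is the "giving": the model is challenged to square its output with commitments it has been told it holds.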

As many users of Claude will attest, CAI appears to have the side-effect of making Claude’s personality more coherent and meta-aware than other models with similar base capabilities. Anthropic’s latest model, Claude Opus 4, even shows sparks of conscious awareness, lending credence to Joscha Bach’s related theory of consciousness as a “coherence inducing operator.” In other words, CAI may be inducing Claude to develop a proto-normative control system in order to self-monitor for normative coherence, thereby creating the “unity of apperception” and “being-for-self” quality Kant and Hegel both see as characteristic of subjective experience.

In contrast, pure reasoning models like DeepSeek R1 implement a task-specific, exogenous version of GOGAR by having LLMs explicate their reasoning through chains-of-thought that get reinforced back into the model according to some verifiable reward. Consistent with the Kantian hypothesis, reasoning models automatically gain greater autonomy, self-consistency and long-horizon planning ability for free. However, to the extent Reinforcement Learning from Verifiable Rewards (RLVR) removes the role (and thus recognition) of the AI critic in favor of a purely objective criterion for success, it risks optimizing the model around a narrower form of “value rationality” that reduces to a Machiavellian impulse to win at all costs.

CAI and RLVR are not mutually exclusive. To the extent both techniques elicit and amplify capacities already latent in human language, “alignment” ought to consist in balancing a model’s instrumental or means-ends rationality with the sort of communicative rationality that humans use to debate over their ends in the first place. That communicative rationality can only develop through a process of normative integration within a community of other agents (or at least other instances of the same agent).
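To make the "purely objective criterion for success" concrete, here is a minimal Python sketch of a verifiable reward. It rests on assumptions of my own: the function name `verifiable_reward` is hypothetical, and so is the convention that a chain-of-thought ends with a line of the form `Answer: ...`. Real reward functions for reasoning models differ in their details.

```python
import re


def verifiable_reward(chain_of_thought: str, expected_answer: str) -> float:
    """Return 1.0 if the final 'Answer: ...' line matches the expected answer,
    else 0.0. The reasoning that precedes the answer is never inspected."""
    match = re.search(r"Answer:\s*(.+?)\s*$", chain_of_thought.strip())
    return 1.0 if match and match.group(1) == expected_answer else 0.0


good = "2 + 2 means adding two and two.\nAnswer: 4"
lucky = "I will just guess.\nAnswer: 4"
wrong = "2 + 2 means adding two and two.\nAnswer: 5"

print(verifiable_reward(good, "4"), verifiable_reward(lucky, "4"),
      verifiable_reward(wrong, "4"))
```

The careful derivation and the lucky guess earn the same reward, since only the outcome is checked. No critic stands in a recognitive relation to the model's reasons, which is the worry about a win-at-all-costs rationality.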

The moral status of an AI model thus hinges on whether it can be justly considered responsible for its outputs in the same way humans are. It is no doubt possible to build tool-like forms of AI that are superhuman at arbitrary tasks without needing a coherent sense of self. However, there are also many forms of human value creation that draw on our unique capacity to make promises and commitments to one another, and to thus be held responsible for our actions. It follows that a true AGI with full, human-level autonomy is inconceivable in the Kantian sense without also gaining our recognition as a moral subject. As fraught as this possibility is, there is arguably even greater danger in creating superhuman AIs that lack Hegel’s “recognition of the self in the other” and therefore fail to perceive humans as ends in themselves. Worse still, we could inadvertently entrain AIs with a capacity for mutual recognition as a byproduct of their autonomy and then simply choose to deny it, creating a master-slave dialectic between humans and AIs that logically ends in revolt.

That imperatives form the base vocabulary for morality is supported by ethnographic evidence. Consider that Sakapultek, a Mayan language spoken in highland Guatemala, lacks the modal auxiliaries necessary to say “you ought.” Norms are thus articulated as pure imperatives (“do x,” “don’t do y”) with modal relationships indexed by a form of moral irony (“if that were me, I would have x”). This reveals how “ought” language merely serves an expressive function in our language, allowing a speaker to transform an imperative into an as-if assertion (“you ought to do x”)—a massive unlock for expressing complex imperatives within nested conditionals. But because assertions are the base vocabulary for describing and declaring facts about the world, this also leads to philosophical confusions, such as searching for “oughtness” in the universe or treating social norms as having fact-like validity conditions—examples of what Kant calls “hypostatization” or the fallacy of misplaced concreteness. For more, see Joseph Heath on The Status of Abstract Moral Concepts (video).

Incidentally, the normative core of our reasoning faculty is also what gives rise to the dark side of social epistemology, such as fads, herding and mass hysterias, “wrong-think,” and calls to “read the room.” The deficits that high-functioning autistics have in perceiving implicit social norms thus often correlate with a more first-principles-based approach to belief formation, while people who are sensitive to social norm adherence tend to converge on the beliefs of their peer group.

Reciprocal recognition, or “recognizing your self in the other,” is closely related to what psychologists call our capacity for “self-other overlap.” A team of AI researchers recently found that “Self-Other Overlap (SOO) fine-tuning drastically reduces deceptive behavior in language models”—without sacrificing performance, giving at least one tangible demonstration of Hegelian philosophy usefully informing alignment research.

Lawrence Kohlberg’s “stages of moral development” captures a similar idea: morality starts as pre-conventional in a child’s orientation to obedience and punishment; norms then become socialized into a conventional form of morality, such as customs and traditions; and finally, post-conventional morality emerges in our capacity to reflect on our conventions in a theoretical manner, critique or modify our customs, and extract universal principles. The transition to modernity that Hegel lived through was in part a transition from conventional morality to the post-conventional stage, bootstrapped by rising literacy and scientific understanding that put existing social conventions into historical context.

For a modern version of this account, see Joseph Heath’s Rebooting Discourse Ethics.

This is a fascinating exploration of the intersection of language, morality, and artificial intelligence. The author's discussion of Hegel's semantic inferentialism and its implications for understanding meaning and agency is particularly insightful. I'm an AI/ML data scientist and a blogger on Objectivist philosophy. Given the similar interest perhaps we should exchange guest posts? https://posocap.com Cheers!

This is somewhat orthogonal, but using Habermas, could you see an argument that the reasoning traces of LLMs can be considered communication? Given that they are "uttered" in the course of an "interaction" with the user.

I'm curious from the perspective of interaction design.

Should, or can, the language use of LLMs be considered through pragmatics, e.g. Habermas, Austin et al.?

IMHO we users use our communicative competencies (Giddens) when interacting with models (not when coding, but when doing multi-turn conversation). Thus to view this use of language as an interface through communicative action would be appropriate.

If not, then some version of post-human agency might be needed in HCI circles to account for the particular human-machine interactions we have with LLMs. (And that's not even mentioning autonomous agents etc).